Achieving <100 ms Latency for Remote Control with WebRTC

In this post we will explore the potential of WebRTC for remote control and how to achieve sub-100 millisecond latency.

I'm on X/Twitter at @iparaskev

Contents

- How remote control works and why latency matters

- Defining remote control latency

- Measuring end-to-end latency

- Experiments setup

- Experiments

- More improvement plots

- Conclusion

Intro

Remote control demands minimal delays for a seamless experience; Apple notes that UI delays over 100 ms disrupt smooth interactions. WebRTC screen sharing in Chrome or WebKit delivers latency that is low enough for simply viewing a shared screen. However, our experience developing Hopp showed that it falls short for remote control.

This post shares how to:

- Measure true end-to-end WebRTC latency beyond basic network stats

- Optimize WebRTC settings to achieve consistent <100 ms latency

This post builds on the outstanding work by Multi on measuring latency and improving screen sharing in their app. Their insights were instrumental in helping us understand WebRTC screen sharing, establish reliable testing methodologies, and further enhance Hopp's performance.

Ditch the frustrating "Can you see my screen?" dance.

Code side-by-side, remotely.

How remote control works and why latency matters

📝 The following text uses Multi's terminology:

- Sharer (host): The one who shares the screen

- Controller: The one who controls the sharer

Unlike passive screen sharing, remote control creates a closed loop where each millisecond of delay compounds:

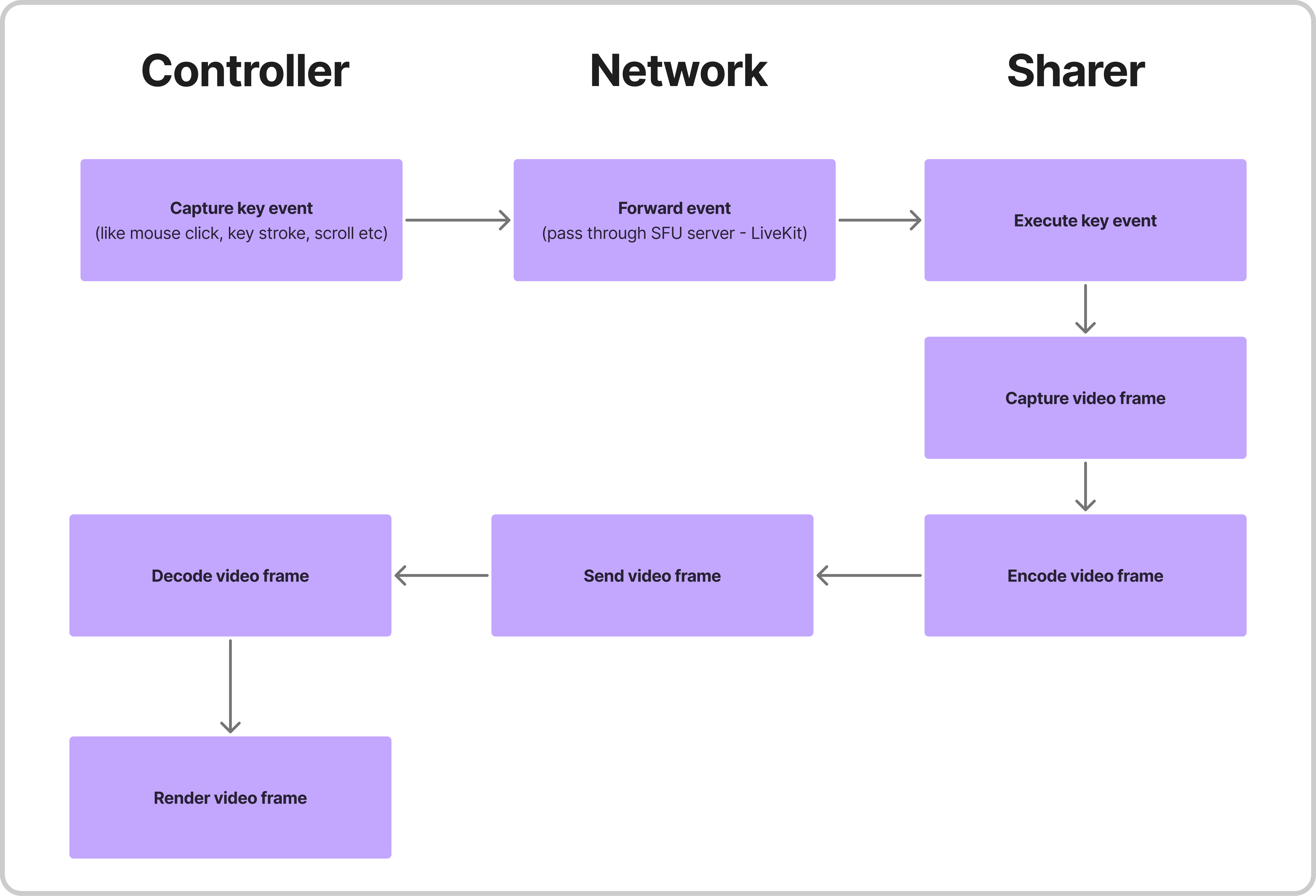

The process works as follows:

1. The Controller sends events to the Sharer

The controller captures input events from mouse movements, clicks, and typing, then transmits them to the sharer.

2. Sharer simulates events

The sharer's computer processes and simulates the incoming events, replicating the controller's actions on the remote system.

3. Capture and Encode the Updated Screen Frame

As the events trigger changes on the sharer (e.g., opening a menu or typing into a text field), the screen is captured to reflect the updated state. The captured frame is encoded and transmitted to the controller.

4. Decode and Display

The controller decodes the frame and renders it on screen.

Defining remote control latency

Latency refers to the time it takes for a user action to produce a visible effect on the screen.

High latency can lead to several issues:

- A noticeable disconnect between keystrokes and their on-screen effects.

- Increased errors when dragging and repositioning UI elements.

- Disrupted natural flow during pair programming sessions 🥲

The key components of latency in remote control applications are the following:

- Encoding and Decoding
  - Compression of screen frames for efficient transmission (Sharer)
  - Decompression of received frames for display (Controller)
  - Typically the most computationally intensive step
- Network Transfer
  - Time for data transfer between the sharer and the controller
  - Affected by bandwidth, network jitter, and packet loss
  - Managed by WebRTC's dynamic adaptation
- Input Processing
  - Capturing user input events (mouse, keyboard)
  - Transmitting events to the sharer
  - Simulating events on the remote system
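Every stage sits in the same closed loop, so the latency the controller perceives is the sum of the stages. The largest term is where optimization pays off first. Below is a minimal Rust sketch of that bookkeeping. LatencyBreakdown and its fields are hypothetical names chosen for illustration; they are not part of Hopp or LiveKit. The per-stage values would have to come from your own instrumentation.

```rust
/// Illustrative per-stage breakdown of one remote-control round trip, in ms.
/// Hypothetical type: the fields mirror the three components listed above.
#[derive(Debug, Clone, Copy, Default)]
pub struct LatencyBreakdown {
    /// Capturing, transmitting, and simulating input events.
    pub input_processing_ms: f64,
    /// Encoding on the sharer plus decoding on the controller.
    pub encode_decode_ms: f64,
    /// Network transfer in both directions (including the SFU hop).
    pub network_ms: f64,
}

impl LatencyBreakdown {
    /// The stages run one after another, so perceived latency is their sum.
    pub fn total_ms(&self) -> f64 {
        self.input_processing_ms + self.encode_decode_ms + self.network_ms
    }

    /// Name of the stage that contributes the most latency.
    pub fn dominant_stage(&self) -> &'static str {
        let stages = [
            ("input processing", self.input_processing_ms),
            ("encoding/decoding", self.encode_decode_ms),
            ("network transfer", self.network_ms),
        ];
        stages
            .iter()
            .max_by(|a, b| a.1.total_cmp(&b.1))
            .map(|(name, _)| *name)
            .unwrap()
    }
}
```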

Initial hypothesis

Industry benchmarks indicate that the ideal latency for remote control is below 100 ms, while the user experience significantly declines when latency exceeds 150 ms. To reduce Hopp's latency (measured at over 300 ms in poor network conditions), we made the following assumptions.

- Encoding/Decoding: Expected to be the primary latency contributor
  - Requires significant computational resources
  - Compression algorithms often need context from multiple frames
- Network Transfer: Variable but managed
  - Mostly dependent on external factors
  - WebRTC handles optimization and adaptation
- Input Processing: Expected minimal impact
  - Lightweight event forwarding and simulation
  - Basic data structures and operations
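The thresholds above make a handy yardstick for every measurement that follows: below 100 ms is ideal, and above 150 ms the experience declines significantly. Here is a small Rust sketch of that yardstick. The band names are our own labels, not an industry standard, and so is the choice to treat 100 to 150 ms as a middle band.

```rust
/// Hypothetical latency bands derived from the 100 ms / 150 ms thresholds.
#[derive(Debug, PartialEq, Eq, Clone, Copy)]
pub enum LatencyBand {
    /// Below 100 ms: the target for remote control.
    Ideal,
    /// 100 to 150 ms: above the ideal, but not yet significantly degraded.
    Acceptable,
    /// Above 150 ms: the experience declines significantly.
    Degraded,
}

/// Classifies an end-to-end latency measurement given in milliseconds.
pub fn classify_latency(latency_ms: f64) -> LatencyBand {
    if latency_ms < 100.0 {
        LatencyBand::Ideal
    } else if latency_ms <= 150.0 {
        LatencyBand::Acceptable
    } else {
        LatencyBand::Degraded
    }
}
```

By this yardstick, the 300+ ms that Hopp measured under poor network conditions falls squarely in the Degraded band.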

Measuring end-to-end latency

Accurately measuring true end-to-end latency in remote control applications is challenging, as no standard tools exist to capture the complete round-trip time for an action to appear on screen.

Inspired by Multi, we adopted a watermarking methodology to address this and reliably measure latency.

Understanding the challenge

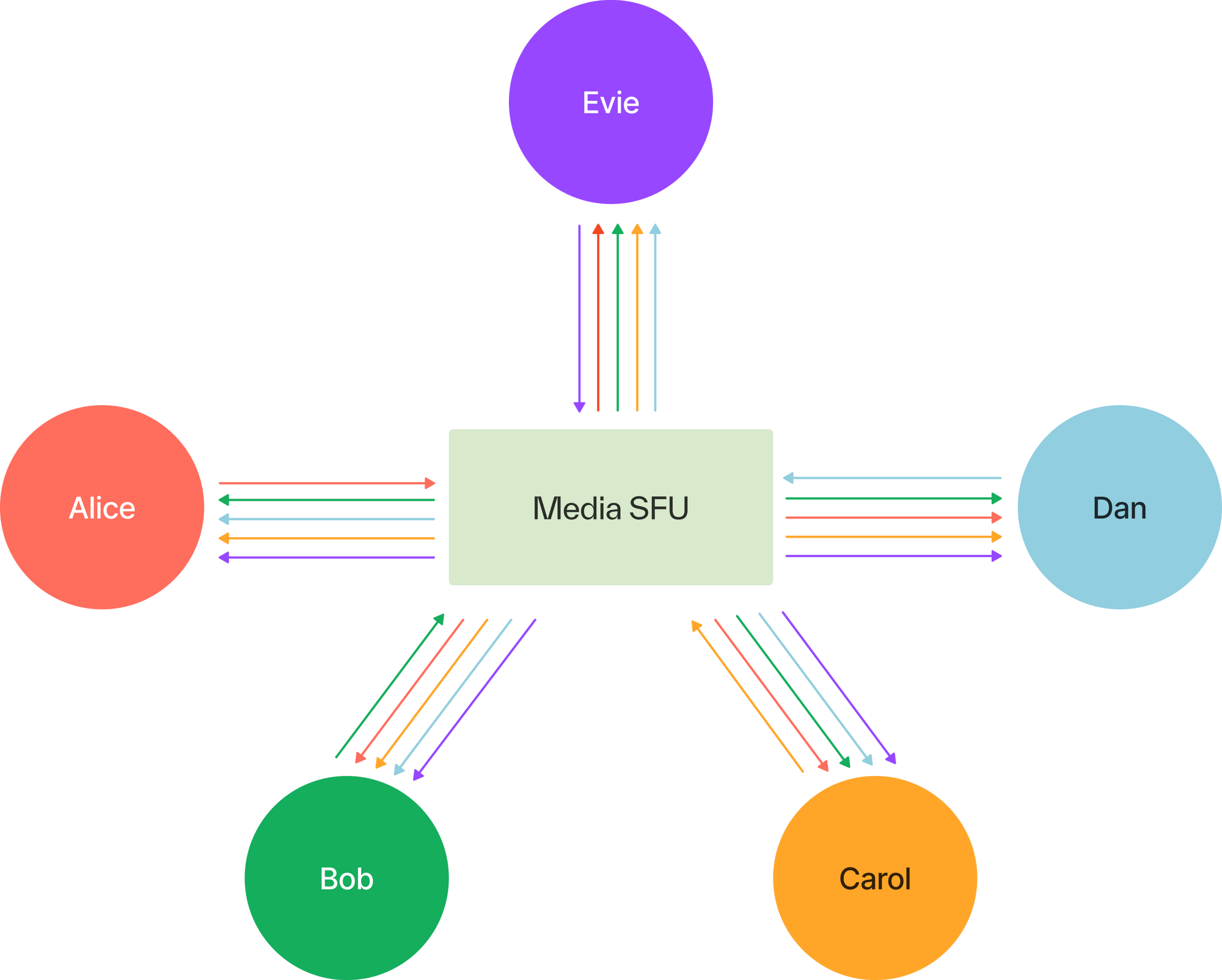

Hopp is built on LiveKit's open-source WebRTC infrastructure and uses its Rust SDK.

LiveKit's infrastructure uses a Selective Forwarding Unit (SFU) architecture, where each publisher sends streams to a central server that then forwards copies to individual subscribers. Measuring complete end-to-end latency needs to include this forwarding step to provide an accurate picture of the total app latency.

The goal was to precisely measure how long it takes for an action performed on the controller (like opening a browser tab) to appear on its screen after processing on the sharer's side.

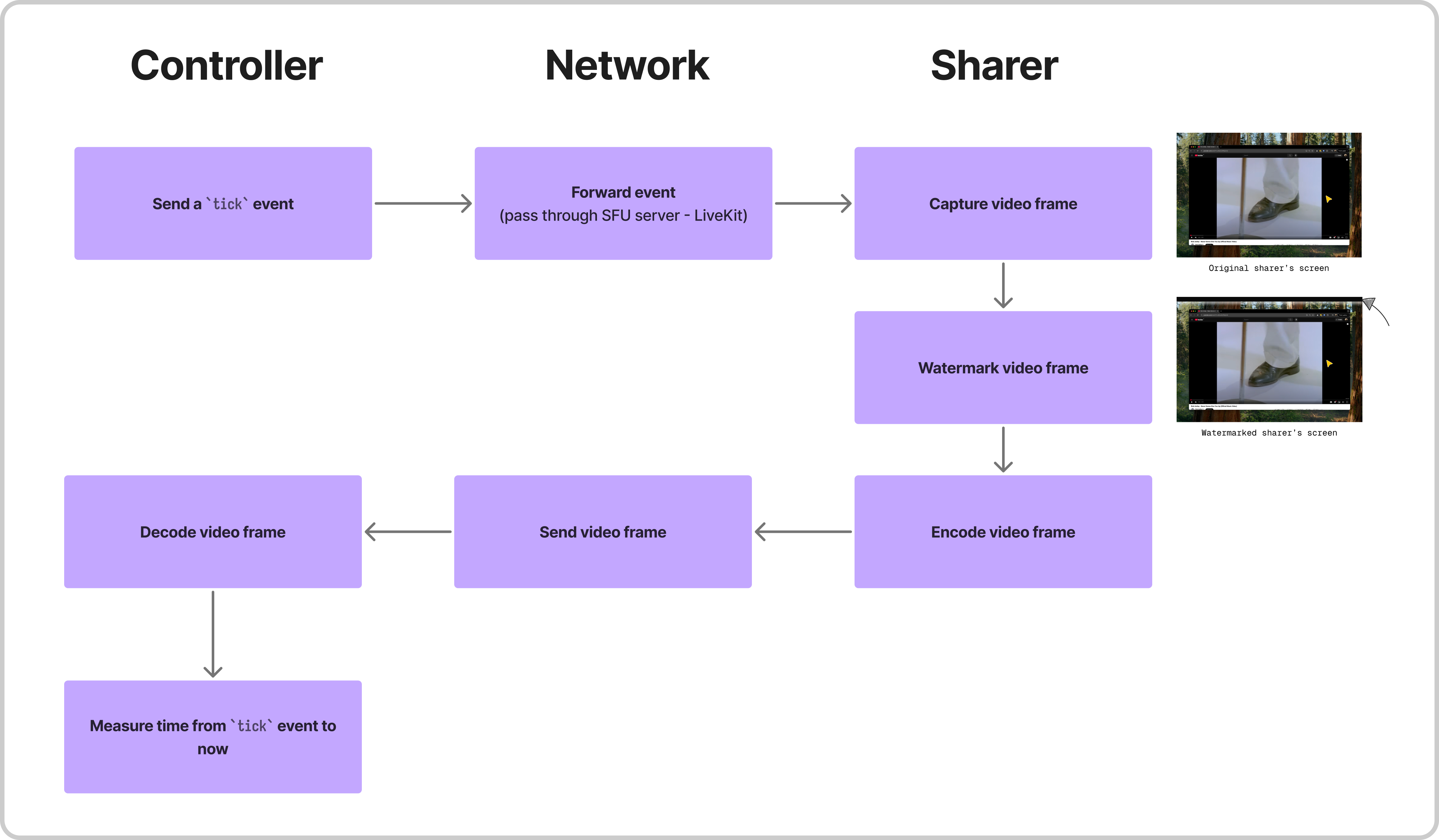

Watermarking solution for precise measurement

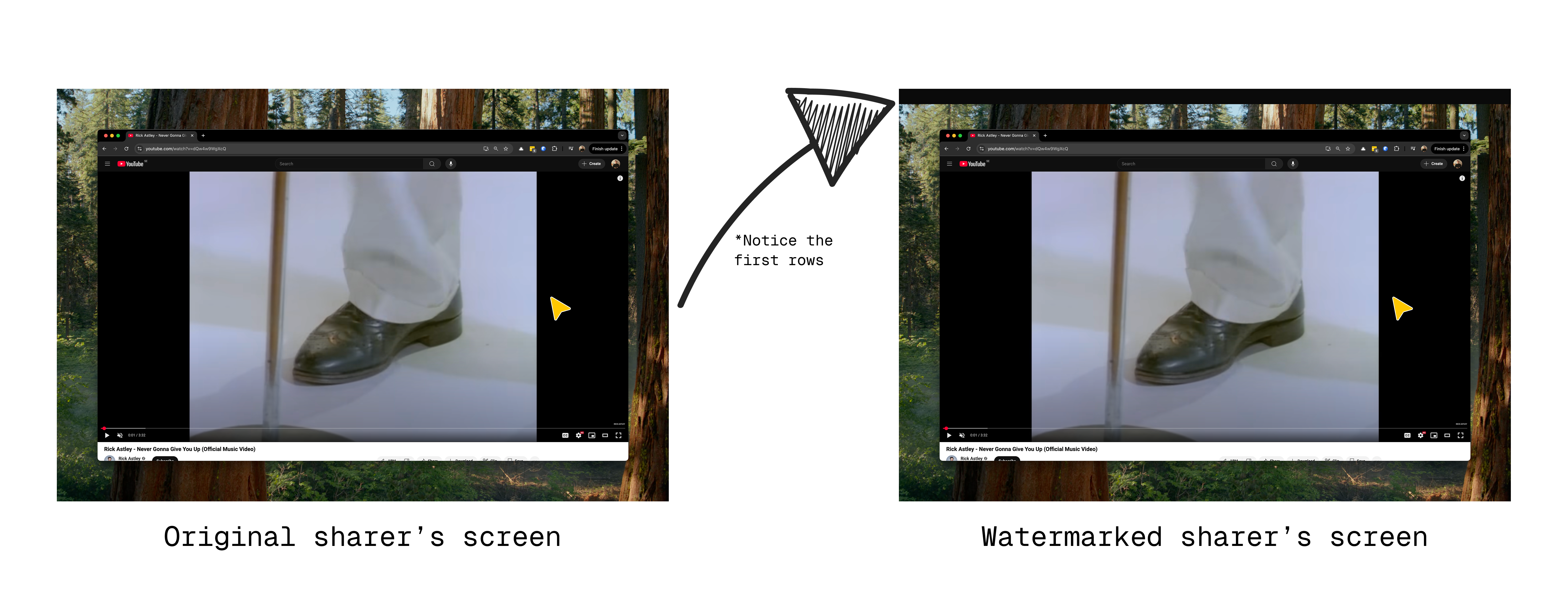

To achieve accurate measurements, this method modifies the captured video buffer on the sharer's side. When the controller sends a tick message on the data channel, the sharer overwrites the first 50 rows of the frame's Y (luma) plane with the value 0xa, producing a visible watermark.

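```rust
// Fill the first 50 rows of the Y (luma) plane with 0xa.
// `dst_y` is the frame's Y plane and `stride_y` its row length in bytes;
// the plane must hold at least 50 rows, or this write is undefined behavior.
unsafe {
    let dst = dst_y.as_mut_ptr();
    std::ptr::write_bytes(dst, 0xa, (50 * stride_y) as usize);
}
```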
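The same write can be done without unsafe. The Rust sketch below is a hypothetical helper, not taken from Hopp's code base. It assumes stride_y is measured in bytes and that the Y plane is a contiguous byte slice. The helper fills the first rows of the plane and reports whether the plane was large enough, instead of risking an out-of-bounds write.

```rust
/// Luma value used for the watermark, matching the 0xa written above.
pub const WATERMARK_VALUE: u8 = 0xa;

/// Hypothetical safe helper: fills the first `rows` rows of a Y plane whose
/// rows are `stride_y` bytes apart. Returns false (and writes nothing) if the
/// plane is smaller than `rows * stride_y` bytes.
pub fn apply_watermark(y_plane: &mut [u8], stride_y: usize, rows: usize) -> bool {
    match y_plane.get_mut(..rows * stride_y) {
        Some(region) => {
            region.fill(WATERMARK_VALUE);
            true
        }
        None => false,
    }
}
```

Assuming dst_y is a &mut [u8], calling apply_watermark(dst_y, stride_y as usize, 50) would reproduce the unsafe write above.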

Below you can see the original frame (left) that the sharer captured and the watermarked frame (right).

How the round-trip measurement works

Building on the watermarking process, the round-trip latency measurement works as follows:

- The controller sends a tick message to the sharer, recording the exact send timestamp.

- Upon receiving the message, the sharer immediately embeds a watermark into the next video frame.

- This watermarked frame passes through the entire pipeline: encoding, network transmission, and decoding.

- Once the controller detects the watermarked frame, it stores the frame's arrival timestamp.

The difference between the arrival and send timestamps is the end-to-end latency. This approach measures the complete round-trip time and captures every source of latency in the system, including the SFU forwarding step.
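The bookkeeping behind these steps is small. Both timestamps come from the controller's own clock, so no clock synchronization between the two machines is needed. Below is a Rust illustration of that bookkeeping; TickSample and now_ms are hypothetical names, not Hopp's code.

```rust
use std::time::{SystemTime, UNIX_EPOCH};

/// Milliseconds since the Unix epoch on the controller's clock.
pub fn now_ms() -> u128 {
    SystemTime::now()
        .duration_since(UNIX_EPOCH)
        .expect("system clock is before the Unix epoch")
        .as_millis()
}

/// One tick and its (eventual) watermarked frame. Hypothetical type.
#[derive(Debug, Clone, Default)]
pub struct TickSample {
    /// When the controller sent the tick on the data channel.
    pub send_timestamp_ms: u128,
    /// When the watermarked frame was detected; 0 until then.
    pub receive_timestamp_ms: u128,
}

impl TickSample {
    /// Starts a sample at the moment the tick is sent.
    pub fn sent_now() -> Self {
        TickSample { send_timestamp_ms: now_ms(), receive_timestamp_ms: 0 }
    }

    /// Records the first detection only, mirroring the measurement code's
    /// guard against overwriting an existing receive timestamp.
    pub fn mark_received(&mut self, at_ms: u128) {
        if self.receive_timestamp_ms == 0 {
            self.receive_timestamp_ms = at_ms;
        }
    }

    /// End-to-end latency, or None if the watermark has not been seen yet.
    pub fn latency_ms(&self) -> Option<u128> {
        if self.receive_timestamp_ms == 0 {
            None
        } else {
            Some(self.receive_timestamp_ms.saturating_sub(self.send_timestamp_ms))
        }
    }
}
```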

Setting up a lightweight controller

💡 This part contains technical information with Rust examples. To see how we reduced latency to about 100 ms, skip to the Experiments section.

Hopp runs on Tauri, which uses WebKit for the UI. To avoid WebKit's overhead during measurements, we built a custom controller using the LiveKit Rust SDK.

Implementation highlights

The controller connects to a LiveKit room and listens for video tracks. Once it detects a track, it starts the latency measurement.

```rust
let url = env::var("LIVEKIT_URL").expect("LIVEKIT_URL environment variable not set");
let token = env::var("LIVEKIT_TOKEN").expect("LIVEKIT_TOKEN environment variable not set");

let (room, mut rx) = Room::connect(&url, &token, RoomOptions::default())
    .await
    .unwrap();
println!("Connected to room: {}", room.name());

// Wait until we subscribe to the sharer's video track, then run the
// selected measurement mode and stop listening for room events.
while let Some(msg) = rx.recv().await {
    match msg {
        RoomEvent::TrackSubscribed {
            track,
            publication: _,
            participant: _,
        } => {
            if let RemoteTrack::Video(track) = track {
                if mode == "ticks" {
                    measure_ticks(room, rx, output_file).await;
                } else if mode == "latency" {
                    end_to_end_latency(room, track, output_file).await?;
                }
                break;
            }
        }
        _ => {}
    }
}
```

end_to_end_latency gets the measurements and stores them in a CSV file.

```rust
async fn end_to_end_latency(room: Room, track: RemoteVideoTrack, output_file: &str) -> io::Result<()> {
    let latency = measure_latency(room, track.rtc_track()).await;
    write_latency_to_csv(&latency, output_file)?;
    Ok(())
}
```
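The write_latency_to_csv function and the struct it writes are not shown here. Below is a hypothetical version, assuming each entry carries the send and receive timestamps plus the RTC stats described next. Making the writer generic over Write lets the same code target a File or an in-memory buffer for testing.

```rust
use std::io::{self, Write};

/// Hypothetical shape of one measurement row (one per tick).
#[derive(Debug, Default, Clone)]
pub struct LatencyEntry {
    pub send_timestamp: u128,
    pub receive_timestamp: u128,
    pub processing_delay: f64,
    pub jitter_buffer_delay: f64,
    pub total_bytes: f64,
}

/// Writes one CSV row per tick, skipping ticks whose watermark never
/// arrived (receive_timestamp == 0).
pub fn write_latency_csv<W: Write>(entries: &[LatencyEntry], mut out: W) -> io::Result<()> {
    writeln!(out, "latency_ms,processing_delay_ms,jitter_buffer_delay_ms,total_bytes")?;
    for e in entries.iter().filter(|e| e.receive_timestamp != 0) {
        let latency = e.receive_timestamp.saturating_sub(e.send_timestamp);
        writeln!(
            out,
            "{},{:.1},{:.1},{}",
            latency, e.processing_delay, e.jitter_buffer_delay, e.total_bytes
        )?;
    }
    Ok(())
}
```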

For each frame, the controller checks whether at least 100 of the first 200 Y-channel samples match the watermark value (0xa). If they do, it records:

- Receive timestamp.

- Average processing delay per frame (the time from the arrival of a frame's first Real-time Transport Protocol (RTP) packet until the frame is decoded).

- Average jitter buffer delay (the time each frame spends in the jitter buffer).

- Total bytes received at the moment the frame arrived.

```rust
let receive_timestamp = std::time::SystemTime::now()
    .duration_since(std::time::SystemTime::UNIX_EPOCH)
    .unwrap()
    .as_millis();

/*
 * Access the buffer and count how many of the first
 * 200 Y samples carry the watermark value.
 */
let buffer = frame.buffer.to_i420();
let (data_y, _, _) = buffer.data();
let watermark_count = data_y[..200].iter().filter(|&&y| y == 0xa).count();

/* Limit for accepting the watermark. */
let min_watermark_count = 100;
/* Delay sampling by 1000 frames. */
let start_sampling_frame = 1000;

if watermark_count >= min_watermark_count && frames > start_sampling_frame {
    let entry = latency_results.last_mut().unwrap();
    /* If the entry has a receive timestamp don't overwrite it. */
    if entry.receive_timestamp == 0 {
        entry.receive_timestamp = receive_timestamp;
        /* Get rtc stats. */
        let (processing_delay, jitter_buffer_delay, total_bytes) =
            get_rtc_stats(&room).await;
        entry.processing_delay = processing_delay;
        entry.jitter_buffer_delay = jitter_buffer_delay;
        entry.total_bytes = total_bytes;
    }
}
```

The RTC stats were acquired via the LiveKit SDK.

```rust
async fn get_rtc_stats(room: &Room) -> (f64, f64, f64) {
    for (_, remote_participant) in room.remote_participants() {
        for (_, publication) in remote_participant.track_publications() {
            // Skip publications without a subscribed track.
            let Some(track) = publication.track() else {
                continue;
            };
            if let RemoteTrack::Video(track) = track {
                let stats = track.get_stats().await.unwrap();
                for stat in stats {
                    if let livekit::webrtc::stats::RtcStats::InboundRtp(stats) = stat {
                        // Cumulative seconds divided by a frame count, in ms.
                        let processing_delay = (stats.inbound.total_processing_delay as f64)
                            / (stats.inbound.frames_decoded as f64)
                            * 1000.;
                        let jitter_buffer_delay = (stats.inbound.jitter_buffer_delay as f64)
                            / (stats.inbound.jitter_buffer_emitted_count as f64)
                            * 1000.;
                        let total_bytes = stats.inbound.bytes_received as f64;
                        return (processing_delay, jitter_buffer_delay, total_bytes);
                    }
                }
            }
        }
    }
    (0., 0., 0.)
}
```
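One caveat about these numbers: in the W3C webrtc-stats definitions, totalProcessingDelay and jitterBufferDelay are running totals in seconds since the stream started. Dividing them by frames_decoded and jitter_buffer_emitted_count therefore yields a lifetime average rather than the delay around the watermarked frame. If a per-interval value is needed, one option is to diff two snapshots. Below is a minimal Rust sketch; InboundSnapshot and interval_delays_ms are hypothetical names, and the fields mirror the counters read above.

```rust
/// Snapshot of the cumulative inbound-rtp counters (delays in seconds).
#[derive(Debug, Clone, Copy)]
pub struct InboundSnapshot {
    pub total_processing_delay_s: f64,
    pub frames_decoded: u64,
    pub jitter_buffer_delay_s: f64,
    pub jitter_buffer_emitted_count: u64,
}

/// Average per-frame processing and jitter buffer delay (ms) between two
/// snapshots. Returns None if no frames were decoded or emitted in the
/// interval, which also avoids the 0/0 = NaN a lifetime average hits at startup.
pub fn interval_delays_ms(prev: &InboundSnapshot, cur: &InboundSnapshot) -> Option<(f64, f64)> {
    let frames = cur.frames_decoded.checked_sub(prev.frames_decoded)?;
    let emitted = cur
        .jitter_buffer_emitted_count
        .checked_sub(prev.jitter_buffer_emitted_count)?;
    if frames == 0 || emitted == 0 {
        return None;
    }
    let processing =
        (cur.total_processing_delay_s - prev.total_processing_delay_s) / frames as f64 * 1000.0;
    let jitter = (cur.jitter_buffer_delay_s - prev.jitter_buffer_delay_s) / emitted as f64 * 1000.0;
    Some((processing, jitter))
}
```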

The controller sent a tick every 5 seconds and stopped after 10 seconds of inactivity.
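The result tables in the Experiments section report both the median and the mean of the per-tick latencies. Below is a small Rust helper for summarizing a run; the name is ours, and reading the CSV is left out.

```rust
/// Mean and median of latency samples (ms). Returns None for an empty slice.
pub fn mean_and_median(samples: &[f64]) -> Option<(f64, f64)> {
    if samples.is_empty() {
        return None;
    }
    let mean = samples.iter().sum::<f64>() / samples.len() as f64;

    // Sort a copy so the caller's order is untouched.
    let mut sorted = samples.to_vec();
    sorted.sort_by(|a, b| a.total_cmp(b));
    let mid = sorted.len() / 2;
    let median = if sorted.len() % 2 == 0 {
        (sorted[mid - 1] + sorted[mid]) / 2.0
    } else {
        sorted[mid]
    };
    Some((mean, median))
}
```

Reporting both helps because a few slow ticks pull the mean up while the median stays put. In the Change #3 results, for example, 1080p_33up has a mean of 128 ms but a median of 116 ms.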

Experiments setup

To simulate a realistic code pairing session, we created a 2.5-minute recording showcasing typical actions in a code editor and web browser. This recording was played full-screen in a loop during our tests, with the sharer streaming its screen as part of the experiments.

Simulating network conditions

To approximate real-world scenarios, the tests simulated different internet connections.

Hopp was initially developed for macOS, so we used Apple's Network Link Conditioner, a macOS tool that simulates different network conditions and was ideal for our testing needs.

The testing used two profiles:

| Profile | Downlink (Mbps) | Uplink (Mbps) |
|---|---|---|
| WiFi | 40 | 33 |
| WiFi_testing | 12 | 4 |

The experiments were conducted using the following environment combinations, where the sharer's connection was modified while the controller's connection remained constant.

- 1080p_4up: Sharer using the WiFi_testing profile and streaming in 1080p resolution.

- 1080p_33up: Sharer using the WiFi profile and streaming in 1080p resolution.

- 1440p_33up: Sharer using the WiFi profile and streaming in 1440p resolution.

The sharer streamed at 30 fps in all setups, and the VP9 encoder was used.
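For a sense of scale, the profiles above can be translated into a per-frame bit budget at 30 fps and compared with the size of an uncompressed frame. Below is a rough Rust sketch. It assumes the whole uplink goes to video, ignoring protocol overhead and other traffic, and it assumes 8-bit I420 frames; the helper names are ours.

```rust
/// Average bits available per frame if the whole uplink carried video.
pub fn bits_per_frame(uplink_mbps: f64, fps: f64) -> f64 {
    uplink_mbps * 1_000_000.0 / fps
}

/// Bytes in one uncompressed 8-bit I420 frame: a full-size Y plane plus two
/// quarter-size chroma planes, i.e. 1.5 bytes per pixel (even dimensions).
pub fn raw_i420_bytes(width: u64, height: u64) -> u64 {
    width * height * 3 / 2
}

/// Average factor by which the encoder must shrink each raw frame.
pub fn required_compression(width: u64, height: u64, uplink_mbps: f64, fps: f64) -> f64 {
    (raw_i420_bytes(width, height) * 8) as f64 / bits_per_frame(uplink_mbps, fps)
}
```

At 1080p, a raw I420 frame is about 3.1 MB. On the 4 Mbps WiFi_testing uplink, the encoder therefore has to shrink each frame by roughly 187× on average, versus about 23× on the 33 Mbps WiFi profile.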

Experiments

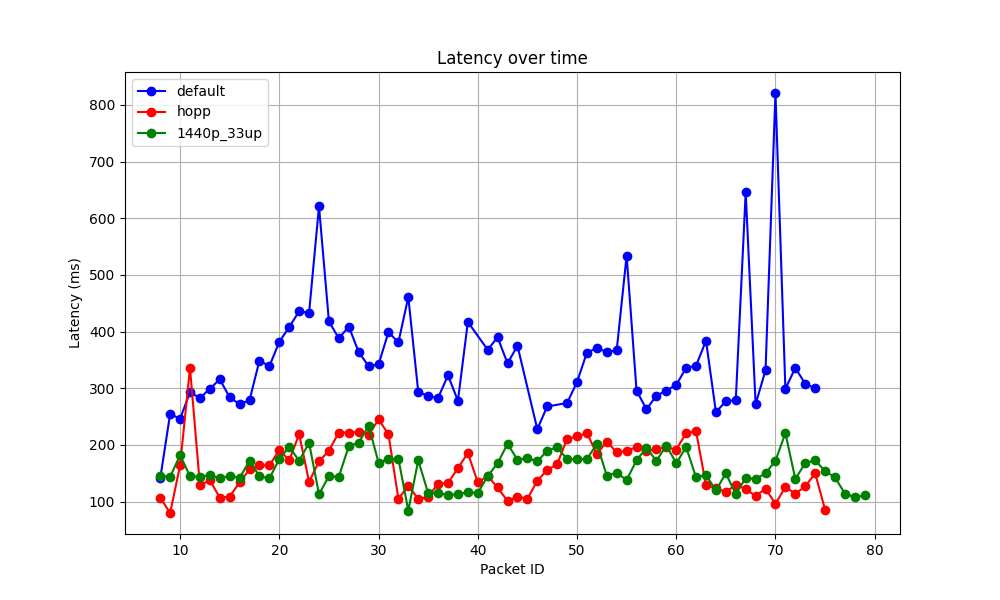

Default LiveKit

The initial setup used the default libWebRTC configuration, which is what LiveKit's Rust SDK uses.

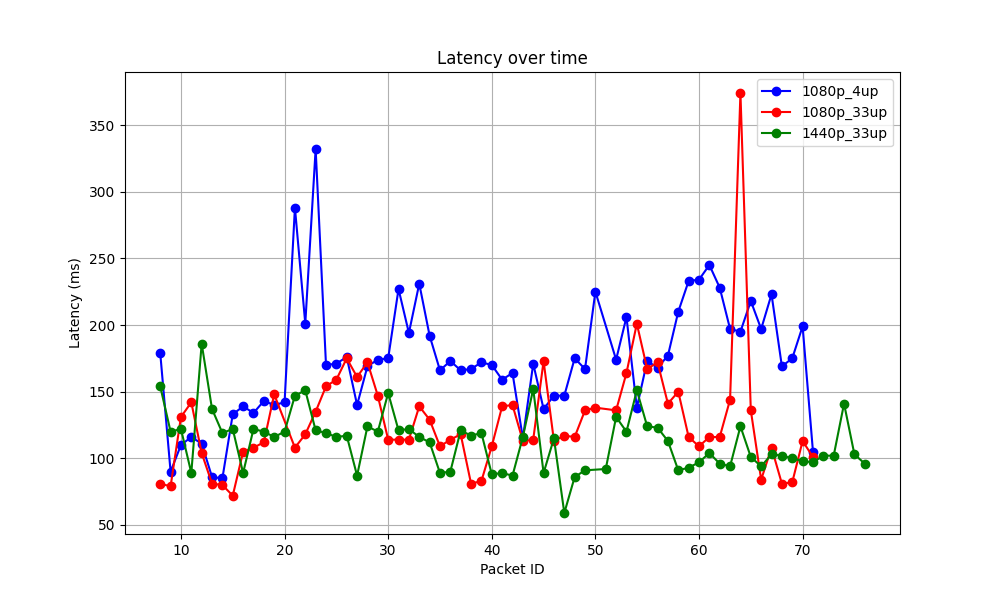

This configuration showed better-than-expected latency under good network conditions. Under poor network conditions, however, the mean latency rose to about 350 ms. The following table and Figure 1 show the results.

| | 1080p_4up | 1080p_33up | 1440p_33up |
|---|---|---|---|
| Latency median (ms) | 334 | 153 | 150 |
| Latency mean (ms) | 347 | 159 | 158 |
| Processing delay (ms) | 232 | 116 | 110 |
| Jitter buffer delay (ms) | 189 | 106 | 102 |
| Inbound bandwidth (mb/s) | 0.139 | 0.144 | 0.229 |

Change #1: Quantizer and rate control

Before addressing latency directly, we drew on Multi's report, which showed that adjusting the Quantization Parameter (QP) significantly improved the legibility of screen-sharing streams. We applied the same approach in our experiments.

The quantizer determines the level of compression applied to the video. A higher quantizer value results in greater compression, reduced bit rate, and increased compression artifacts, often perceived as lower quality. Conversely, a lower quantizer value means less compression, a higher bit rate, and improved quality.

Hopp focuses on screen sharing and pair programming, where crisp, legible text is crucial to perceived quality. To achieve this, we reduced the maximum quantizer from 52 to 36 and increased the minimum quantizer from 2 to 4. These adjustments kept quality consistent and matched Multi's recommendations.

Rate control, governed by the undershoot and overshoot percentages, determines how far the encoder can deviate below or above its target bit rate. For Hopp, we increased the undershoot percentage to 100% so the encoder can reduce the bit rate aggressively for static content. We also decreased the overshoot percentage to 15% to limit large bit-rate spikes above the target.

```diff
- config_->rc_min_quantizer = codec_.mode == VideoCodecMode::kScreensharing ? 8 : 2;
- config_->rc_max_quantizer = 52;
- config_->rc_undershoot_pct = 50;
- config_->rc_overshoot_pct = 50;
+ config_->rc_min_quantizer = 4;
+ config_->rc_max_quantizer = 36;
+ config_->rc_undershoot_pct = 100;
+ config_->rc_overshoot_pct = 15;
```
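To see what the new percentages mean in practice, the description of rate control above can be turned into a bit-rate band around the target. The Rust sketch below follows that simplified description, and the helper name is ours. As we read the libvpx documentation, the encoder treats these percentages as thresholds that steer how aggressively rate control corrects deviations, not as hard caps. The band is therefore only an intuition aid.

```rust
/// Bit-rate band (kbps) under the simplified model: the rate may fall
/// `undershoot_pct` percent below the target and rise `overshoot_pct`
/// percent above it.
pub fn bitrate_band_kbps(target_kbps: u32, undershoot_pct: u32, overshoot_pct: u32) -> (u32, u32) {
    let low = target_kbps.saturating_sub(target_kbps * undershoot_pct / 100);
    let high = target_kbps + target_kbps * overshoot_pct / 100;
    (low, high)
}
```

Take an arbitrary 1,000 kbps target. The old settings (50/50) give a 500 to 1,500 kbps band, while Hopp's settings (100/15) give 0 to 1,150 kbps. The encoder may go nearly silent on a static screen, but bursts above the target stay small.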

The results of these changes surprised us: latency improved across all configurations. The improvement came primarily from the adjusted rate control settings, which let the encoder aggressively lower the bit rate for static content. This reduced the total inbound bandwidth while maintaining visual quality. The following table and Figure 2 show the results.

| | 1080p_4up | 1080p_33up | 1440p_33up |
|---|---|---|---|
| Latency median (ms) | 296 | 138 | 128 |
| Latency mean (ms) | 290 | 140 | 129 |
| Processing delay (ms) | 197 | 86 | 88 |
| Jitter buffer delay (ms) | 167 | 76 | 77 |
| Inbound bandwidth (mb/s) | 0.103 | 0.108 | 0.167 |

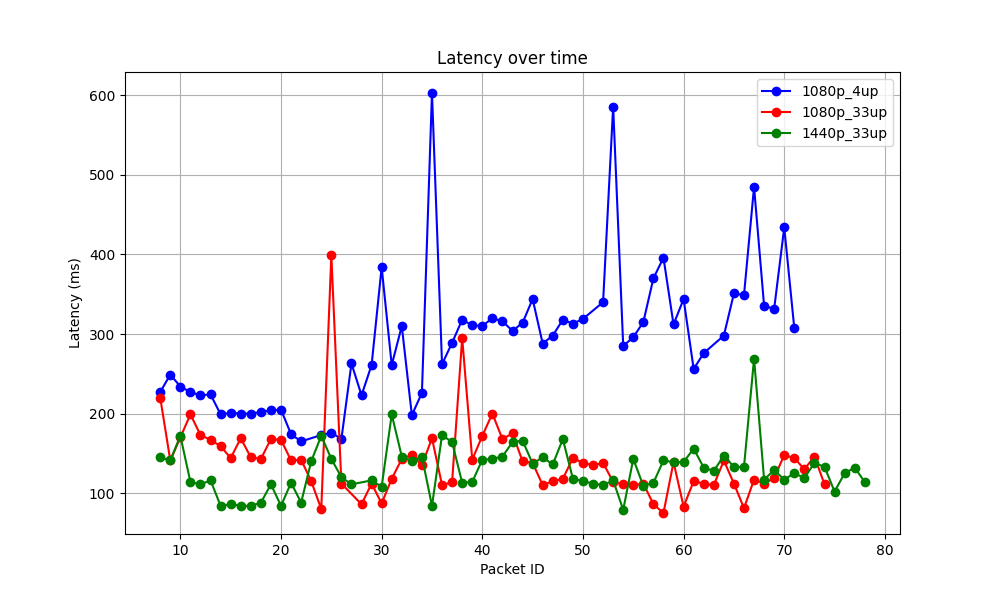

Change #2: Buffer size

To address the jitter buffer delay, we explored configuration flags in the VP9 encoder. The LiveKit JS SDK offers a setPlayoutDelay function for adjusting a video track's playout delay. However, WebKit, which Hopp's UI runs on, doesn't support it, so we needed a different approach.

We focused on three buffer-related parameters in VP9:

| Parameter | Description | Configured setting |
|---|---|---|
| rc_buf_sz | Specifies how much data (in milliseconds) the decoder can buffer | 300 ms |
| rc_buf_initial_sz | Defines the amount of data initially buffered by the decoder (in milliseconds) | 0 ms |
| rc_buf_optimal_sz | Indicates the ideal buffer size (in milliseconds) that the encoder aims to maintain | 200 ms |

We kept the total and optimal buffer sizes non-zero because some buffering helps absorb jitter in poor network conditions.
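These sizes are given in milliseconds, so it can help to translate them into bytes and frames. Below is a small Rust sketch. It assumes a constant target bit rate and the 30 fps used in the experiments. The 1 Mbps in the usage note is an arbitrary illustration, not a measured Hopp value.

```rust
/// Bytes of encoded video that a buffer of `buffer_ms` holds at `target_bps`.
pub fn buffer_bytes(target_bps: u64, buffer_ms: u64) -> u64 {
    target_bps * buffer_ms / 1000 / 8
}

/// Number of frames that a buffer of `buffer_ms` spans at `fps`.
pub fn buffer_frames(buffer_ms: f64, fps: f64) -> f64 {
    buffer_ms * fps / 1000.0
}
```

At 30 fps, the 300 ms rc_buf_sz spans 9 frames and the 200 ms optimal size spans 6 frames. At a 1 Mbps target, they correspond to 37,500 and 25,000 bytes.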

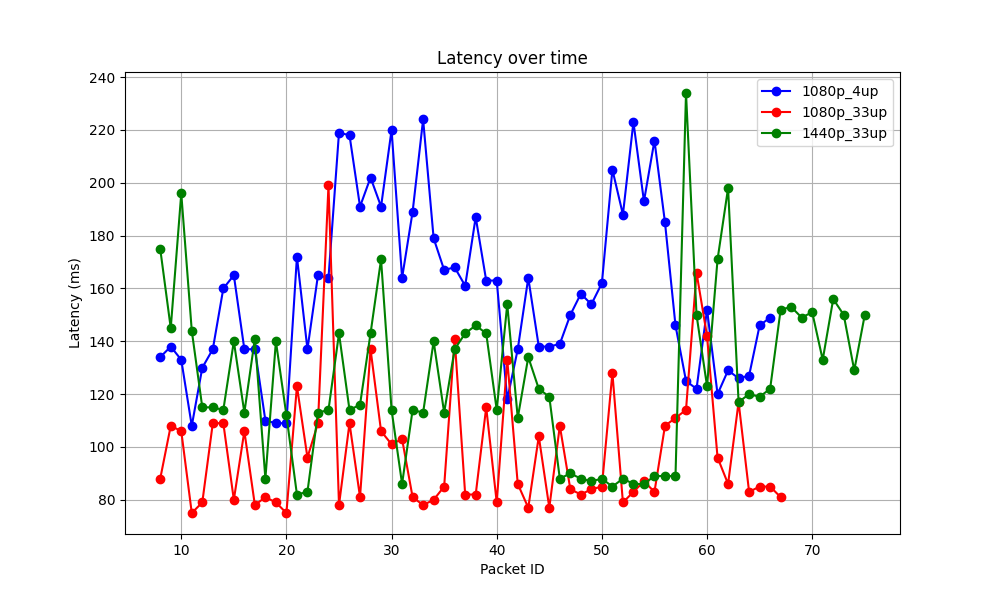

The results exceeded expectations. Compared with the default configuration, these adjustments reduced mean latency considerably: by over 100 ms under poor network conditions and by more than 20 ms under optimal conditions at both resolutions. Reducing the initial buffer size and lowering the optimal size made the encoder and decoder respond faster. The following table and Figure 3 show the results.

| | 1080p_4up | 1080p_33up | 1440p_33up |
|---|---|---|---|
| Latency median (ms) | 216 | 134 | 137 |
| Latency mean (ms) | 222 | 132 | 134 |
| Processing delay (ms) | 167 | 89 | 88 |
| Jitter buffer delay (ms) | 150 | 79 | 79 |
| Inbound bandwidth (mb/s) | 0.139 | 0.143 | 0.230 |

Change #3: Combine all the preceding changes

The next logical step was to combine the optimizations from the quantizer adjustments, rate control tuning, and buffer size configuration.

This combination proved highly effective. Under poor network conditions, the mean latency dropped below 200 ms. Under optimal conditions, the median latency fell to 116 ms at both resolutions.

The following table and Figure 4 show the results.

| | 1080p_4up | 1080p_33up | 1440p_33up |
|---|---|---|---|
| Latency median (ms) | 171 | 116 | 116 |
| Latency mean (ms) | 174 | 128 | 112 |
| Processing delay (ms) | 124 | 79 | 72 |
| Jitter buffer delay (ms) | 108 | 68 | 63 |
| Inbound bandwidth (mb/s) | 0.111 | 0.114 | 0.185 |

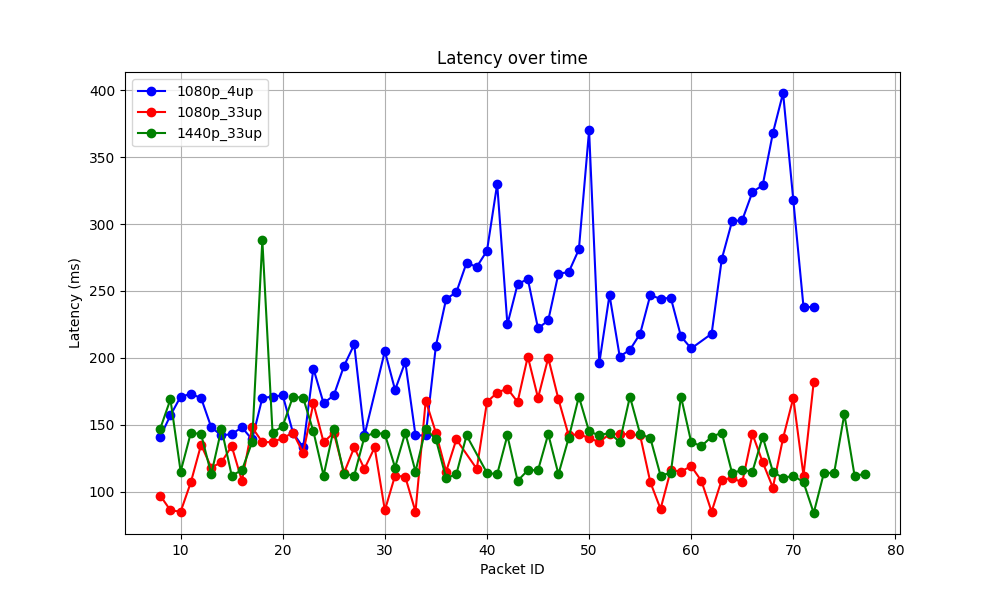

Change #4: Set screencast mode in WebRTC

While experiments produced promising numbers, noticeable latency persisted during real-world use of Hopp, especially during major screen changes, such as switching tabs or navigating an IDE.

Upon examining the libWebRTC source code and running tests, we discovered that LiveKit wasn't setting is_screencast in its VideoTrackSource.

After we enabled it, an unexpected outcome occurred: experimental latency increased, but the real-world user experience of Hopp slightly improved.

Further debugging revealed a known libWebRTC issue where enabling is_screencast limited the frame rate to 5 fps. To address this, we modified the source code to remove the frame-rate limit.

Alongside this, we enabled parallel decoding and tuned the Alternative Reference Noise Reduction (ARNR) filtering settings. ARNR uses future frames to create an Alternate Reference Frame (ARF), which improves encoding of static content. After experimentation, we configured the following parameters:

- VP8E_SET_ENABLEAUTOALTREF: Set to 6 for increased encoder flexibility in using ARF.

- VP8E_SET_ARNR_MAXFRAMES: Limited to 5 frames to cap the encoder's delay to 150 ms.

- VP8E_SET_ARNR_STRENGTH: Defines the degree to which filtering occurs, set to 5.

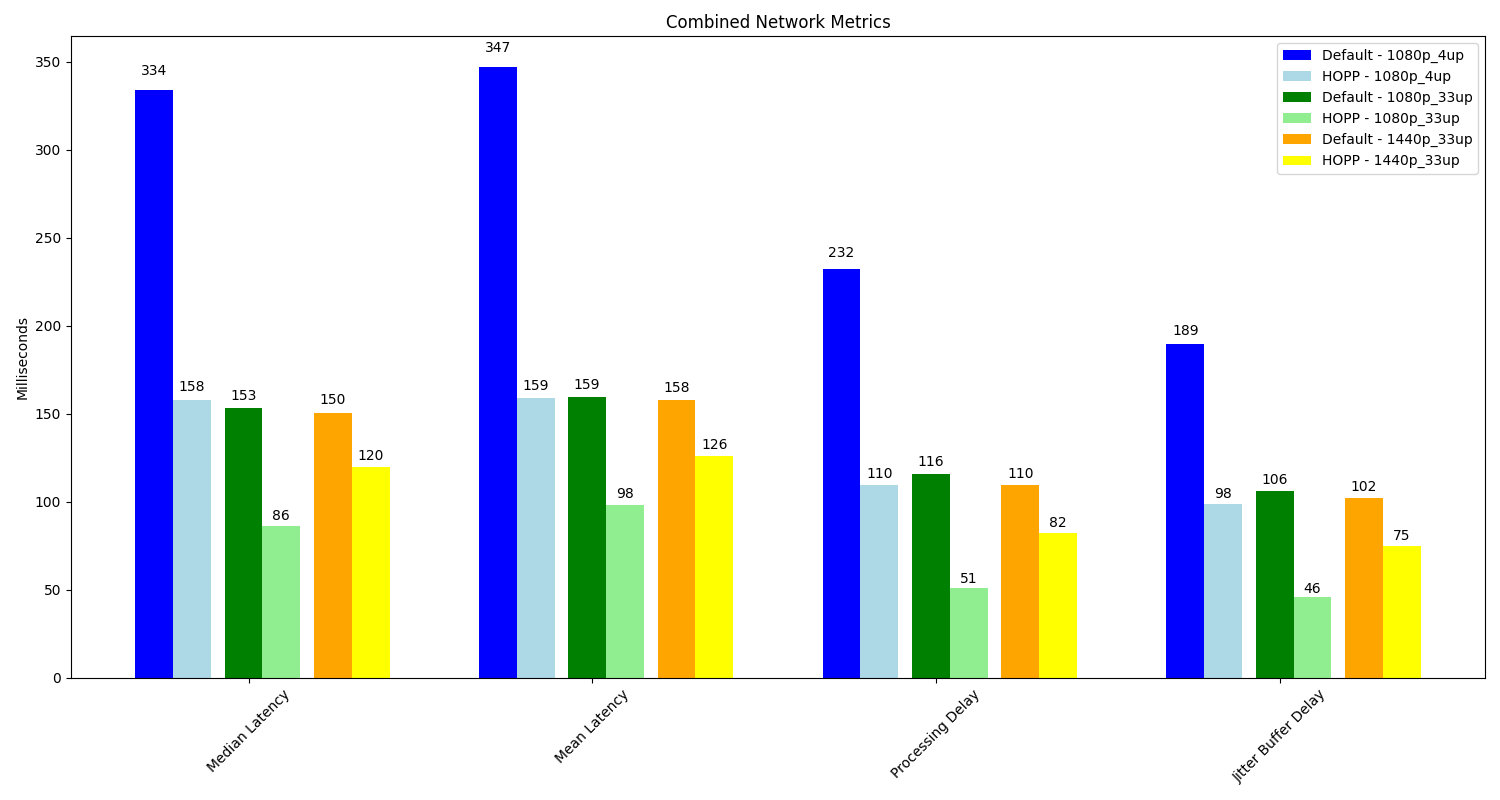

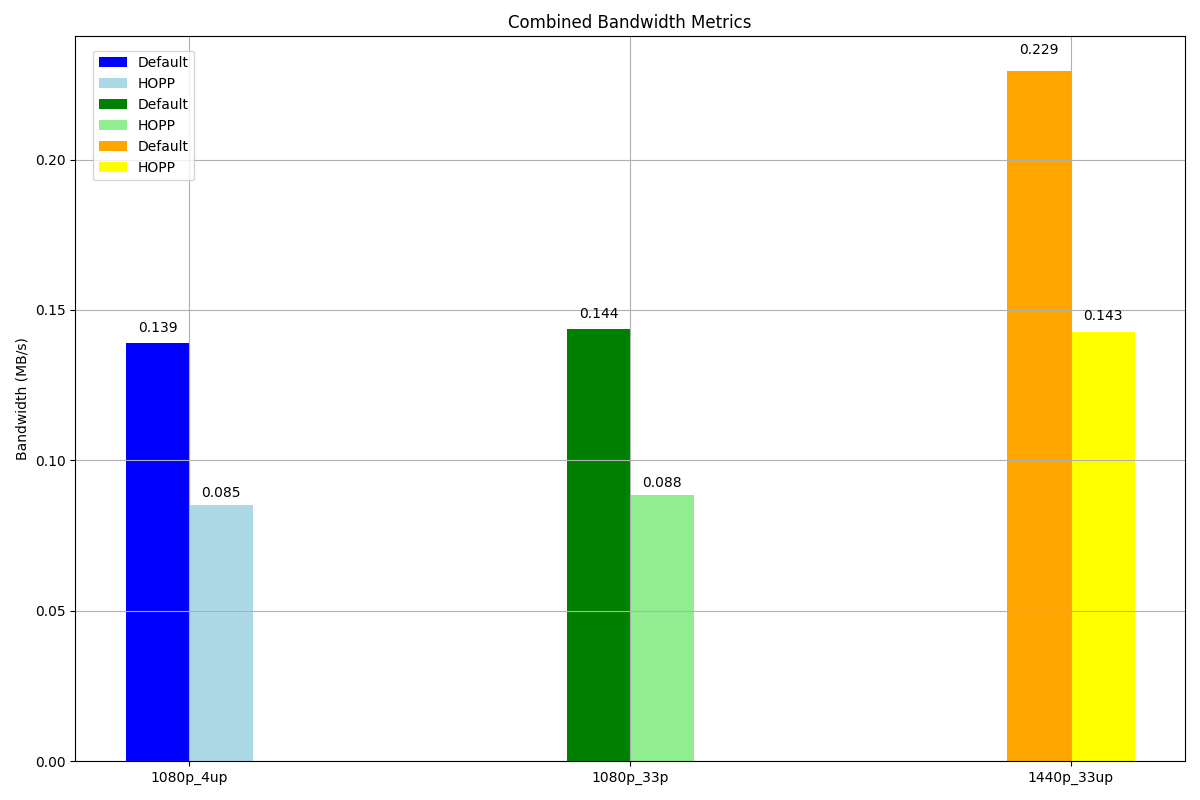

These changes led to major latency improvements. For the first time, sessions achieved sub-100 ms latency for 1080p in optimal conditions.

Under poor network conditions, average latency dropped to 160 ms, while 1440p in optimal conditions achieved an average latency of 126 ms, as shown in the following table and Figure 5.

| | 1080p_4up | 1080p_33up | 1440p_33up |
|---|---|---|---|
| Latency median (ms) | 158 | 86 | 120 |
| Latency mean (ms) | 159 | 98 | 126 |
| Processing delay (ms) | 110 | 51 | 82 |
| Jitter buffer delay (ms) | 98 | 46 | 75 |
| Inbound bandwidth (mb/s) | 0.085 | 0.088 | 0.143 |

More improvement plots

The following figures show the total improvement achieved in every testing environment.

Note: The experiments were not conducted in a controlled lab environment, and we observed a variation of ±10 ms between runs with identical settings. The plots and tables presented reflect a single, randomly selected run.
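The overall gains can also be read directly from the tables. The Rust sketch below computes the relative reduction in mean latency between the Default LiveKit and Change #4 results; the helper names are ours.

```rust
/// (setup, mean latency with default LiveKit, mean latency after Change #4), in ms.
const SETUPS: [(&str, f64, f64); 3] = [
    ("1080p_4up", 347.0, 159.0),
    ("1080p_33up", 159.0, 98.0),
    ("1440p_33up", 158.0, 126.0),
];

/// Relative reduction from `before` to `after`, as a percentage.
pub fn reduction_pct(before: f64, after: f64) -> f64 {
    (before - after) / before * 100.0
}

/// Prints one line per test environment.
pub fn print_improvements() {
    for (name, before, after) in SETUPS {
        println!(
            "{}: {} ms -> {} ms ({:.1}% lower)",
            name,
            before,
            after,
            reduction_pct(before, after)
        );
    }
}
```

This works out to roughly 54%, 38%, and 20% lower mean latency for 1080p_4up, 1080p_33up, and 1440p_33up respectively. Given the ±10 ms run-to-run variation, the smaller differences should be read as approximate.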

Conclusion

By refining quantization, rate control, buffer sizes, and screencast settings we achieved substantial latency reductions and lowered inbound bandwidth usage. These optimizations brought Hopp to a performance level we consider launch-ready, with room for further improvements based on user feedback.

As this is our first deep dive into WebRTC, there may be more effective configurations we’ve overlooked. We’d love to hear your insights or suggestions regarding:

- Parameters better suited for achieving ultra-low-latency screen sharing

- Alternative configuration strategies

Feel free to reach out at costa@gethopp.app or iason@gethopp.app